What have we done?

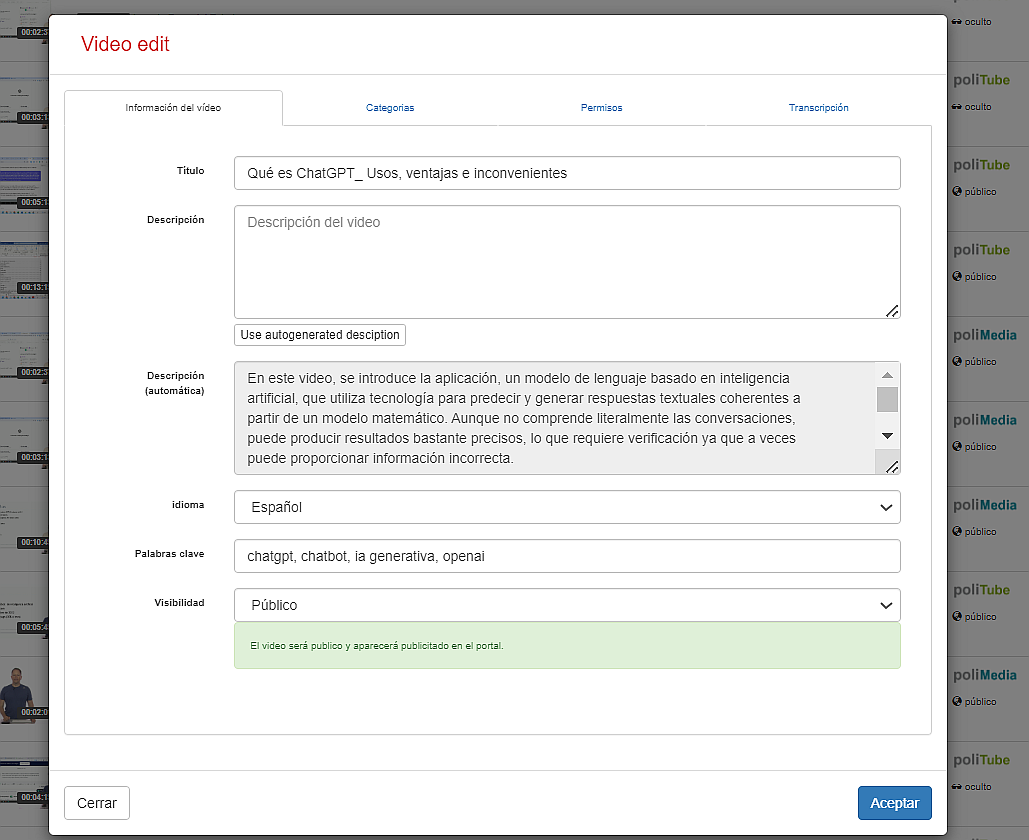

At the OAD, we are working to improve the accessibility of the digital content created by the UPV. To that end, we have automatically generated a description of the content of each video learning object hosted on UPV Media.

In the first (pilot) phase, we generated descriptions for 16,000 videos. We are now starting the second phase, which will cover the remaining publicly accessible videos on UPV Media.

How did we do it?

The summaries shown in each description were produced using state-of-the-art generative AI models and developments from UPV research departments and ASIC. First, the transcript of each video is extracted automatically. The transcript is then passed to a pre-trained chatbot, which improves and summarizes the text. We validated the quality of the automatic descriptions through human review and consider the results to be of high quality.
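As a rough illustration only, the two steps described above (automatic transcription, then summarization) could be sketched as follows. This is not the actual UPV implementation: the names `describe_video`, `VideoDescription`, `stub_transcribe`, and `stub_summarize` are hypothetical, and the stubs merely stand in for a real speech-to-text system and a real chatbot, neither of which is specified here.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class VideoDescription:
    """Result of the pipeline for one video (hypothetical structure)."""
    video_id: str
    transcript: str
    summary: str
    reviewed: bool = False  # set to True once a person has checked the summary


def describe_video(
    video_id: str,
    transcribe: Callable[[str], str],
    summarize: Callable[[str], str],
) -> Optional[VideoDescription]:
    """Run the two steps in order: transcribe the video, then summarize the text."""
    transcript = transcribe(video_id).strip()
    if not transcript:
        # No speech was recognized, so there is nothing to summarize.
        return None
    summary = summarize(transcript).strip()
    return VideoDescription(video_id, transcript, summary)


# Stand-ins for the real components (assumptions, for demonstration only).
def stub_transcribe(video_id: str) -> str:
    return f"This is the transcript of {video_id}. It has two sentences."


def stub_summarize(transcript: str) -> str:
    # Naive placeholder: keep only the first sentence.
    return transcript.split(". ")[0].rstrip(".") + "."


if __name__ == "__main__":
    result = describe_video("video-001", stub_transcribe, stub_summarize)
    print(result.summary)
```

Passing the transcription and summarization steps in as functions keeps the sketch independent of any particular model, and the `reviewed` flag marks where the human validation step would record its outcome.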